Today I ran into a problem with TLS (SSL) record sizes making my site sluggish. The server was doing a good job of sending large messages down to the client, and I am using a late-model version of the OpenSSL library, so why would this happen?

HTTP and TLS both seem like streaming protocols. But with HTTP, the smallest sized “chunk” you can send is a single byte. With TLS, the smallest chunk you can send is a TLS record. As the TLS record arrives on the client, it cannot be passed to the application layer until the full record is received and its integrity check (the MAC) is verified. So, if you send large SSL records, all packets that make up that record must be received before any of the data can be used by the browser.
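To get a feel for how much has to arrive before the browser sees anything, here is a rough back-of-the-envelope helper. It assumes about 1460 bytes of TCP payload per packet (a typical Ethernet figure, not something I measured) and ignores the few dozen bytes of TLS header and MAC overhead. The function name and the constant are made up for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed TCP payload per packet; typical for Ethernet, not measured. */
#define ASSUMED_MSS 1460u

/* Number of packets that must all arrive before a record carrying
 * record_len bytes can be verified and handed to the application.
 * TLS header/MAC overhead is ignored, so this is a lower bound. */
size_t packets_for_record(size_t record_len, size_t mss)
{
    assert(mss > 0);
    return (record_len + mss - 1) / mss;  /* ceiling division */
}
```

Under these assumptions a 16KB record (the largest TLS allows) spans at least 12 packets, while a 1400-byte record fits in one.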

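In my case, the HTTP-to-SPDY proxy in front of my webserver was reading chunks of 40KB from the HTTP server, and then handing as much of that data as it could to a single SSL_write() call for delivery over SPDY (which uses SSL for now). This meant the data went out in the largest records TLS allows (a single record carries at most 16KB, so a 40KB write becomes a few maximum-size records), and the client couldn’t use any of a record’s data until that whole record was received. And since that much data will often take more than one round trip to arrive, this is a very bad thing.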
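Why the round trips? A fresh TCP connection only sends a small window of packets before waiting for acknowledgements. The sketch below counts the flights needed under an idealized slow start: the window starts at `init_cwnd` segments and doubles every round, with no loss and no receive-window limit. The function name, the 1460-byte segment size, and the initial window values are all assumptions for illustration; real stacks vary.

```c
#include <assert.h>
#include <stddef.h>

/* Flights needed to deliver total_bytes under idealized slow start:
 * init_cwnd segments go out in the first flight and the window
 * doubles after every flight. No loss, no receive-window limit. */
unsigned flights_to_deliver(size_t total_bytes, size_t mss, size_t init_cwnd)
{
    assert(mss > 0 && init_cwnd > 0);
    size_t segments = (total_bytes + mss - 1) / mss;  /* ceiling division */
    size_t cwnd = init_cwnd;
    unsigned flights = 0;

    while (segments > 0) {
        size_t sent = segments < cwnd ? segments : cwnd;
        segments -= sent;
        cwnd *= 2;   /* window doubles each round in slow start */
        flights++;
    }
    return flights;
}
```

With these assumptions, 40KB (40960 bytes) needs 2 flights with an initial window of 10 segments and 4 flights with an initial window of 3, whereas anything that fits in one packet arrives in the first flight.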

It turns out this problem surfaces more with time-to-first-paint than with overall page-load-time (PLT), because it has to do with the browser seeing data incrementally rather than in a big batch. But it can still impact PLT, because it can add multi-hundred-millisecond delays before the browser discovers sub-resources.

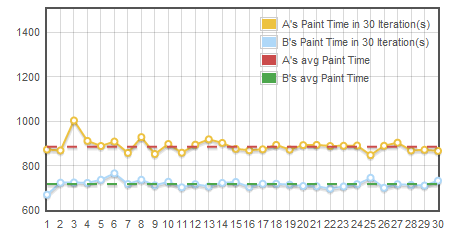

The solution is easy: on your server, don’t call SSL_write() with big chunks. Chop the data down to something smallish, around 1500-3000 bytes per call. Here is a graph comparing the time-to-first-paint for my site with just this change. It shaved over 100ms off the time-to-first-paint.
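Here is a minimal sketch of the chopping, not the actual proxy code. To keep it self-contained, the writer is a hypothetical callback type (`write_fn`); in a real server it would be a thin wrapper around `SSL_write()`, which normally turns each small write into its own record. `write_in_chunks`, `write_stats`, and `counting_writer` are names invented for this example, and the counting writer is only a stand-in so the loop can be exercised without a TLS connection.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical writer callback: returns the number of bytes written
 * (> 0), or <= 0 on error. A real one would wrap SSL_write(). */
typedef int (*write_fn)(void *ctx, const unsigned char *buf, int len);

/* Write len bytes in pieces of at most max_chunk bytes (max_chunk is
 * assumed to fit in an int). Returns the number of bytes written,
 * which is less than len if the writer reported an error. */
size_t write_in_chunks(write_fn writer, void *ctx,
                       const unsigned char *buf, size_t len,
                       size_t max_chunk)
{
    assert(writer != NULL && max_chunk > 0);
    size_t done = 0;

    while (done < len) {
        size_t want = len - done;
        if (want > max_chunk)
            want = max_chunk;
        int n = writer(ctx, buf + done, (int)want);
        if (n <= 0)
            break;            /* leave error handling to the caller */
        done += (size_t)n;    /* also copes with short writes */
    }
    return done;
}

/* Stand-in writer for demonstration: counts calls and bytes. */
struct write_stats { size_t calls; size_t bytes; size_t largest; };

int counting_writer(void *ctx, const unsigned char *buf, int len)
{
    struct write_stats *s = ctx;
    (void)buf;                /* data is not inspected here */
    s->calls++;
    s->bytes += (size_t)len;
    if ((size_t)len > s->largest)
        s->largest = (size_t)len;
    return len;
}
```

Feeding a 40KB buffer through `write_in_chunks` with a 1500-byte limit results in 28 writer calls, none larger than 1500 bytes, instead of one 40KB call.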