This post is definitely for protocol geeks.

SPDY has been up and running in the “basic case” at Google for some time now, but I have never written publicly about some wicked cool possibilities for SPDY in the future. (Much to my surprise, someone may already be doing them today!)

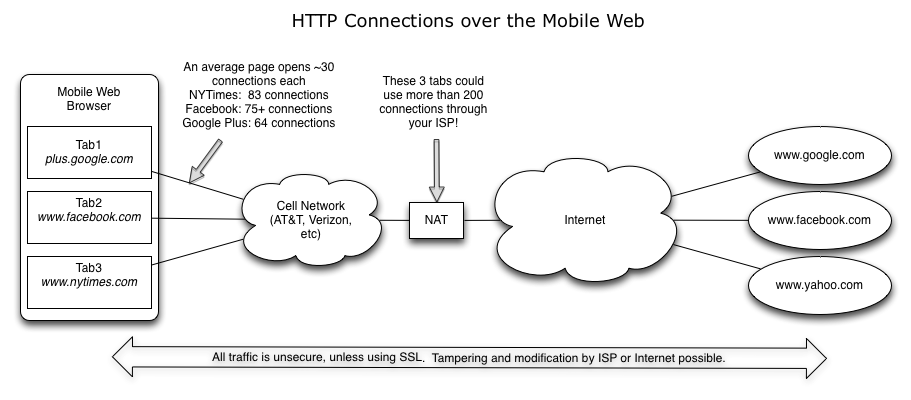

To start this discussion, let’s consider how the web basically works today. In this scenario, we’ve got a browser with 3 tabs open.

As you can see, these pages use a tremendous number of concurrent connections. This pattern has been measured in both Firefox and Chrome. Many mobile browsers today cap connections at lower levels due to hardware constraints, but their desktop counterparts generally don’t, because the only way to get true parallelism with HTTP is to open lots of connections. The HTTP Archive adds more good data to the mix, showing that an average web page today uses data from 12 different domains.

Each of these connections needs a separate handshake to the server. Each of these connections occupies a slot in your ISP’s NAT table. Each of these connections needs to warm up TCP’s slow start algorithm independently (slow start is how TCP learns how much data your Internet connection can handle). Eventually, the connections feed out onto the Internet and on to the sites you’re visiting. It’s impressive that this system works at all, because it is certainly a very inefficient use of TCP. Jim Gettys, one of the authors of HTTP, has observed these inefficiencies and written about the effects of HTTP’s connection management in his work on ‘bufferbloat’.
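To make the slow start cost concrete, here is a rough Python sketch that counts how many round trips it takes to deliver a response when the congestion window starts small and doubles every round trip. The 1460-byte segment size, the initial window of 3 segments, and the 48-segment “warm” window are assumptions for illustration; the model ignores losses, delayed ACKs, and receive-window limits.

```python
MSS = 1460  # assumed TCP segment size in bytes


def slow_start_rtts(nbytes: int, init_cwnd: int = 3) -> int:
    """Round trips needed to deliver nbytes when the congestion window starts
    at init_cwnd segments and doubles every round trip (idealized slow start)."""
    segments = -(-nbytes // MSS)  # ceiling division: segments needed
    cwnd, sent, rtts = init_cwnd, 0, 0
    while sent < segments:
        sent += cwnd   # one full window goes out per round trip
        cwnd *= 2      # slow start doubles the window after each round trip
        rtts += 1
    return rtts


cold = slow_start_rtts(30_000)                # brand-new connection
warm = slow_start_rtts(30_000, init_cwnd=48)  # connection whose window already grew
print(cold, warm)  # 3 1
```

Under these assumptions, a 30 KB response needs 3 round trips on a brand-new connection but only 1 on a connection whose window has already grown, and each of those dozens of fresh connections pays that startup cost separately.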

SPDY of Today

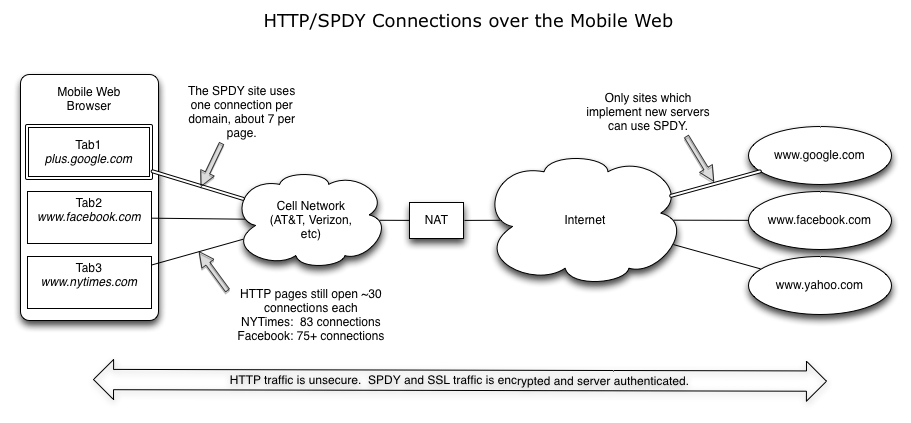

A first step to reduce connection load is to migrate sites to SPDY. SPDY lives side by side with HTTP, so not everyone needs to move to SPDY at the same time. Pages that do move to SPDY get reduced page load times and are transmitted with always-on security. On top of that, these pages are much gentler on the network too. Suddenly those 30-75 connections per page evaporate into only 7 or 8 connections per page (a little less than one per domain). For large site operators, this can have a radical effect on overall network behavior. Note that early next year, when Firefox joins Chrome in implementing SPDY, more than 50% of users will be able to access your site using SPDY.
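A back-of-the-envelope sketch of the connection math, assuming every request on the page is issued at once, that the browser opens at most 6 parallel HTTP/1.1 connections per host, and that SPDY uses one connection per host. The hostnames and request counts are made up for illustration.

```python
from collections import Counter

PER_HOST_LIMIT = 6  # typical desktop-browser cap on parallel HTTP/1.1 connections per host


def http_connections(requests: list[str]) -> int:
    """Connections needed if all requests go out at once over HTTP/1.1:
    one request in flight per connection, capped at PER_HOST_LIMIT per host."""
    per_host = Counter(requests)
    return sum(min(n, PER_HOST_LIMIT) for n in per_host.values())


def spdy_connections(requests: list[str]) -> int:
    """With SPDY, all requests to one host share a single multiplexed connection."""
    return len(set(requests))


# Hypothetical page: one hostname per request.
page = (
    ["www.example.com"] * 20
    + ["static.example.com"] * 30
    + ["ads.example.net"] * 8
    + ["fonts.example.org"] * 2
)
print(http_connections(page), spdy_connections(page))  # 20 4
```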

SPDY of the Future

Despite its coolness, there is an aspect of SPDY that doesn’t get much press yet (because nobody is doing it). Kudos to Amazon’s Kindle Fire for inspiring me to write about it. I spent a fair amount of time running network traces of the Kindle Fire, and I honestly don’t yet know quite what they’re doing. I hope to learn more about it soon. But based on what I’ve seen so far, it’s clear to me that they’re taking SPDY far beyond where Chrome or Firefox can.

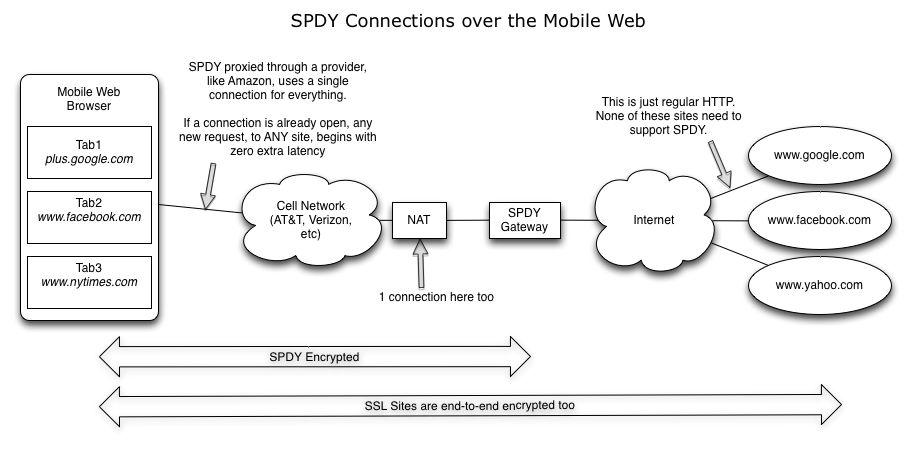

The big drawback of the previous picture of SPDY is that it requires sites to individually switch to SPDY. This is advantageous from a migration point of view, but it means it will take a long time to roll out everywhere. But, if you’re willing to use a SPDY gateway for all of your traffic, a new door opens. Could mobile operators and carriers do this today? You bet!

Check out the next picture of a SPDY browser with a SPDY gateway. Because SPDY can multiplex many streams over one connection, the browser can now put literally EVERY request onto a single SPDY connection. Now, any time the browser needs to fetch a resource, it can send the request right away, without needing to do a DNS lookup, a TCP handshake, or even an SSL handshake. On top of that, every request is encrypted on the link to the gateway, not just those that go to SSL sites.
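The trick that makes a single connection enough is multiplexing: every request and response is cut into frames tagged with a stream ID, and frames from different streams are interleaved on the one connection. The sketch below is a toy model of that idea, not SPDY’s actual binary framing; the frame size, the round-robin scheduling, and the request strings are assumptions (real SPDY also compresses headers and schedules by priority). Client-initiated SPDY streams do use odd IDs, which is why the example uses 1, 3 and 5.

```python
from collections import defaultdict
from itertools import zip_longest


def chunk(data: bytes, size: int) -> list[bytes]:
    """Split data into frames of at most `size` bytes."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def multiplex(streams: dict[int, bytes], frame_size: int = 4) -> list[tuple[int, bytes]]:
    """Tag each stream's frames with its stream ID and interleave them round-robin
    into one ordered sequence, as if writing them to a single connection."""
    per_stream = [[(sid, c) for c in chunk(body, frame_size)] for sid, body in streams.items()]
    wire: list[tuple[int, bytes]] = []
    for frames_this_round in zip_longest(*per_stream):
        wire.extend(f for f in frames_this_round if f is not None)
    return wire


def demultiplex(wire: list[tuple[int, bytes]]) -> dict[int, bytes]:
    """Rebuild each stream by concatenating its frames in arrival order."""
    out: dict[int, bytearray] = defaultdict(bytearray)
    for sid, payload in wire:
        out[sid] += payload
    return {sid: bytes(buf) for sid, buf in out.items()}


streams = {1: b"GET /index.html", 3: b"GET /style.css", 5: b"GET /logo.png"}
wire = multiplex(streams)
print([sid for sid, _ in wire][:6])  # [1, 3, 5, 1, 3, 5]
assert demultiplex(wire) == streams
```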

Wow! This is really incredible. They’ve just taken that massive ugly problem of ~200 connections to the device and turned it into 1! If your socks aren’t rolling up and down right now, I’m really not sure what would ever get you excited. To me, this is really exciting stuff.

Some of you might correctly observe that we still end up with a lot of connections out the other end (past the SPDY gateway). But keep in mind that the bottleneck of the network today is the “last mile”, the last mile to your house. Bandwidth and latency on the general Internet are orders of magnitude better than on that last mile to your house, so enabling SPDY on that link matters more than anywhere else. And the potential network efficiency gains here are huge for mobile operators and ISPs. Because latencies are better on the open Internet, it should still yield reduced traffic on the other side, but this is purely theoretical. I haven’t seen any measurement of it yet. Maybe Amazon knows 🙂
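Some rough arithmetic shows why moving connection setup off the last mile matters. The sketch assumes DNS and TCP setup each cost one round trip and a full TLS handshake two, and uses a hypothetical 150 ms last-mile RTT and 20 ms gateway-to-origin RTT; none of these numbers are measurements.

```python
# Assumed round trips per setup step (full TLS handshake, no session resumption).
SETUP_RTTS = {"dns": 1, "tcp": 1, "tls": 2}

LAST_MILE_RTT_MS = 150  # hypothetical last-mile RTT (e.g. a mobile link)
CORE_RTT_MS = 20        # hypothetical gateway-to-origin RTT over the Internet core


def setup_ms(rtt_ms: int, steps: tuple[str, ...] = ("dns", "tcp", "tls")) -> int:
    """Milliseconds spent on connection setup before the first request can go out."""
    return rtt_ms * sum(SETUP_RTTS[s] for s in steps)


# A plain HTTP site reached directly by the browser vs. by the gateway on its behalf.
direct_http = setup_ms(LAST_MILE_RTT_MS, ("dns", "tcp"))
gateway_http = setup_ms(CORE_RTT_MS, ("dns", "tcp"))
print(direct_http, gateway_http)  # 300 40
```

Under these assumptions, the browser talking directly to a plain HTTP site pays about 300 ms of setup before its first request, while a gateway reaching the same origin over the core pays about 40 ms, and the browser’s own SPDY connection to the gateway is already open. SSL sites that stay end-to-end still pay their TLS round trips across the last mile.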

More Future SPDY

Finally, as an exercise for the reader, I’ll leave it to you to imagine the possibilities of SPDY multiplexing many sites, each with its own end-to-end encryption. In the diagram above, SSL is still end-to-end, so starting an SSL conversation still requires a few round trips. But maybe we can do even better…

Great stuff Mike.

SPDY is just as enticing and exciting on the “back end”: for long-haul transit inside a CDN, for example, or machine-to-machine for the “Web API” case (we did this a lot at Yahoo!).

The new SPDY architecture sounds good, but what about the age-old head-of-line blocking issue when a single connection is handling everything?

How is video treated differently from static content?

Don’t get too fixated on 1 total connection vs. 2 total connections. I would expect implementors to discover situations where two do indeed help. But you won’t need 10, 20, or 50, like what we’re seeing today.

As for video, it’s not handled differently.

HTTP proxies have existed for a very long time, as have mechanisms to propagate those proxies to the end user, like WPAD.

Usage of them seems to be in decline.

Squid, at least, used to be heavily used within organizations and schools, and polipo (much lighter, lower latency) is widely used in home routers and as an interface to Tor.

Polipo, at least, attempts to ‘hammer down’ multiple requests into a single HTTP/1.1 stream, which it does mostly successfully.

There is another advantage to web proxies in general: you can do protocol translation (IPv6 to IPv4 or vice versa) over them. (I do that all the time: I run IPv6 pretty much exclusively and access the web via a polipo proxy.)

Another huge advantage of proxies over wireless is that you are no longer issuing DNS queries over the wireless link, which itself suffers from head-of-line blocking in our bufferbloated era, and you don’t have to worry about packet loss in the DNS system. Also, the proxy, usually located at the bottleneck link, can *maybe* make better decisions about the available bandwidth on both sides of the link.

But all that said, when a proxy breaks, it’s really, really annoying.

Also, Squid at least has high enough latency to make it unusable with today’s highly dynamic web sites. (Gmail, for one example, is very slow through Squid, and polipo, while tolerable, was not much better when I last tried it.)

And while I’m encouraged about SPDY, I’m curious: is work going on to make SPDY work right with these existing proxies? Or will something new be needed?

Proxies have indeed been a thorn for a long time. This is a good topic for a whole other post!

The primary reason I dislike proxies of the past is that they are implicit proxies, rather than explicit proxies. What is the difference, you ask? An implicit proxy can be placed in the middle of your communication stream to do whatever it wants – without your permission. Sometimes, proxies are used for good purposes, like adding better caching or adding additional security features. But sometimes they are used for things you simply don’t want – like blocking content, reducing bandwidth when you didn’t want them to, or simply spying on your traffic.

The great thing about moving to SSL for everything is that these proxies can still exist, but the user must agree to use them in order for them to tamper with your data stream. In other words, they are transformed from implicit to explicit.

For existing proxies (the implicit ones), everything still passes through, but the proxy can’t tamper with the stream. It becomes mostly irrelevant.

So technically, SPDY *does* support proxies, just as HTTP did. It’s just that the user now gets the opportunity to “opt in” before proxies can work. Some critics of SPDY complain that it breaks proxy caches. This is simply not true: proxies have never been able to cache encrypted content, so SPDY didn’t “break” anything.

Back to this topic: the idea of using SPDY proxies to radically reduce the number of connections really is unique to SPDY. While we could have implemented SSL HTTP proxies in the past (no browser ever bothered to implement SSL auth to the proxy itself prior to SPDY), it wouldn’t have done anything to help with the number of connections. And adding an SSL handshake to your proxy for 50 concurrent connections could actually have hurt a lot in terms of performance and efficiency.

I’m glad you brought this up; it’s worthy of more discussion. SSL authentication to your proxy is pretty awesome stuff.

This proposal would perform terribly over cellular networks or other noisy connections.

TCP uses exponential backoff: when one packet drops, TCP cuts the sending rate of the entire connection in half. This makes sense if dropped packets are a sign of traffic congestion. But it has catastrophic results when you hop on the train, go through a small tunnel, and try to continue using your TCP connection.

I need to finish writing up my notes from Jon Jenkins’s talk at Velocity EU; it really opened my eyes to the possibilities SPDY opens up.

One of the things Amazon are doing (or planning to do) is out-of-order downloads, e.g. a user requests a website, and while the Amazon proxies retrieve the page from the origin server, they can start pushing any cached resources (images, CSS, JS, etc.) they have for that page down to the user, then multiplex the page HTML in when they’ve got it.

The Amazon proxy model seems like a great opportunity for mobile operators to improve browsing speeds for their customers.

Is there any research into how SPDY performs over 2G/2.5G/3G networks which of course aren’t native IP?

To state the obvious, what we need to see is more people implementing it in proxies and servers. Strangeloop are about the only people I can think of who’ve implemented it.

@danfabulich: TCP’s trouble with halving cwnd on losses is well known and needs to be addressed. But is it better to have 100 connections going through that same process? The problem with lots of little connections is that each one of them backs off independently. And the key here is understanding the type of losses. Are the losses correlated? Or random? Among the experts who have looked at it, the general belief is that losses (such as those on a train) are highly correlated, meaning that one loss is indicative of multiple losses. If they are indeed correlated, then having 100s of connections going through this retry process, rather than one, makes the situation worse.

But, overall, I agree with your assessment. The TCP layer needs a lot of work here. Fortunately, people are looking at it quite heavily.
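One mechanism behind “more connections makes it worse” can be sketched with a simplified loss-recovery model: fast retransmit needs three duplicate ACKs, which only arrive if at least three segments after the lost one make it through. A connection with only a few segments in flight often can’t generate them and has to sit out a retransmission timeout instead. The window sizes and loss pattern below are assumptions, and the model ignores SACK, Limited Transmit, and other refinements that help small windows in practice.

```python
DUPTHRESH = 3  # duplicate ACKs needed to trigger fast retransmit


def recovery(window: int, lost: set[int]) -> str:
    """How one connection with `window` segments in flight recovers from `lost`.
    Each segment arriving after the first loss produces one duplicate ACK;
    with fewer than DUPTHRESH of them, the sender must wait for a timeout."""
    if not lost:
        return "none"
    first = min(lost)
    dupacks = sum(1 for seg in range(first + 1, window) if seg not in lost)
    return "fast_retransmit" if dupacks >= DUPTHRESH else "timeout"


# The same correlated burst hits one big connection or ten small ones.
one_big = recovery(40, set(range(10, 15)))        # 5 consecutive segments lost
ten_small = [recovery(4, {1}) for _ in range(10)]  # one loss in each small window
print(one_big, ten_small.count("timeout"))  # fast_retransmit 10
```

In this model the burst costs the single large connection one fast retransmit, while each of the ten small connections stalls on its own timeout.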

Keep in mind, however, that we’re peeling an onion here. HTTP’s extreme network inefficiency makes it very hard (impossible?) for TCP to fix this problem. By moving to something more sane, we start giving the transport the ability to fix its problems too.

FYI – you can create URLs to specific charts in the HTTP Archive. Here’s the chart for average number of domains per site: http://httparchive.org/trends.php#numDomains

My Kindle Fire arrived. I connected it to a Wi-Fi hotspot on my laptop running tcpdump in order to see the network behavior. But when I ran that pcap file through pcap2har, nothing showed up. Aha: SSL! How do you see the HTTP traffic? Can you post simple steps?

@Andy Davies: The out-of-order loading is tricky. We spent a ton of time on Chrome trying to find anything that is faster. There are many ways to measure page load times, but a couple of key ones are “time to first render” and “total time”. It’s very easy to improve total time (by doing things like sending the static resources first), but, in all likelihood, you will make time to first render (when the user starts seeing useful content) worse. I was unwilling to make this tradeoff at the time.
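The tradeoff can be illustrated with a toy model: resources go over one link strictly one after another, cached resources are ready at the proxy immediately, and the HTML is only ready once the proxy has fetched it from the origin. The link speed, sizes, and fetch delay are hypothetical, and treating first render as “HTML and CSS have arrived” is a simplification; real SPDY interleaves frames by priority rather than sending whole resources in sequence.

```python
MS_PER_KB = 10  # hypothetical link speed: 100 KB/s


def schedule(order: list[str], resources: dict[str, tuple[int, int]]) -> dict[str, int]:
    """Send resources back to back in `order`; resources maps name -> (size_kb, ready_ms).
    A resource can't start until it is ready at the proxy. Returns finish times in ms."""
    t, done = 0, {}
    for name in order:
        size_kb, ready_ms = resources[name]
        t = max(t, ready_ms) + size_kb * MS_PER_KB
        done[name] = t
    return done


def metrics(done: dict[str, int]) -> tuple[int, int]:
    """(time to first render, total time); first render needs HTML and CSS here."""
    return max(done["html"], done["css"]), max(done.values())


# Hypothetical page: the HTML needs 300 ms to arrive from the origin; the rest is cached.
page = {"html": (20, 300), "css": (10, 0), "images": (200, 0)}
html_first = metrics(schedule(["html", "css", "images"], page))
static_first = metrics(schedule(["images", "html", "css"], page))
print(html_first, static_first)  # (600, 2600) (2300, 2300)
```

Pushing the cached images first shortens total time (2600 ms to 2300 ms) but moves first render from 600 ms out to 2300 ms, which is exactly the tradeoff described above. The same model can be used to try other orders, such as pushing only the CSS while waiting for the HTML.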

I hope Amazon continues in that vein and publishes results if they find something key.

This is one area where TCP still hurts us. Even though we’ve multiplexed SPDY to the hilt, once you’ve handed the data to TCP, TCP can only deliver it in that order (head-of-line blocking). So you either have to avoid putting too much data in the pipe (and risk not having a full pipe at times), or you’ll sometimes have HOL blocking. Meh. SCTP tries to address this, but the problems with SCTP are too many to mention here…

@Steve S: Tracing is hard! I didn’t dissect the Kindle SSL traffic, and I don’t have abbreviated instructions for how to do it. Chrome has a mechanism where, for debugging, you can dump your SSL keys (this is safe, because you are the endpoint). But I don’t know how to do that on the Kindle.
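A minimal sketch of why SPDY’s multiplexing can’t escape TCP’s ordering: the receiver only hands bytes to the application up to the first missing segment, regardless of which SPDY stream the later segments belong to. Numbering segments 0, 1, 2, … instead of by byte offset, and the tiny payloads, are simplifications.

```python
def deliverable(arrived: dict[int, tuple[int, bytes]]) -> list[tuple[int, bytes]]:
    """TCP releases data to the application strictly in sequence order.
    `arrived` maps segment number -> (stream_id, payload) for segments received.
    Delivery stops at the first gap, even if later segments belong to other streams."""
    out = []
    seq = 0
    while seq in arrived:
        out.append(arrived[seq])
        seq += 1
    return out


# Three SPDY streams interleaved on one TCP connection.
sent = {0: (1, b"a0"), 1: (3, b"b0"), 2: (5, b"c0"), 3: (1, b"a1"), 4: (3, b"b1"), 5: (5, b"c1")}
arrived = {seq: frame for seq, frame in sent.items() if seq != 1}  # segment 1 is lost
print(deliverable(arrived))  # [(1, b'a0')]: streams 1 and 5 wait behind stream 3's loss
```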

What I did was fairly trial-and-error – connect to various sites, trace the connections, notice that it doesn’t create new connections to hit new sites. However, the patterns were not consistent for me, so I don’t know *entirely* what it is doing. The thoughts in this article are theoretical about what I think they’re getting close to, but I’m not sure of the specifics. I hope Amazon will comment here or elsewhere!!!! 🙂
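For a client you control, the key-dumping approach looks like this with Python’s standard `ssl` module, which can write session secrets in the NSS key log format that Wireshark reads to decrypt a matching capture. This is only a sketch of the general technique; it does not help with a device like the Kindle Fire, where you can’t change the client, and the file path is a placeholder.

```python
import ssl


def debug_tls_context(keylog_path: str) -> ssl.SSLContext:
    """Client TLS context that appends session secrets to keylog_path in the
    NSS key log format. Debugging only: anyone holding the file can decrypt the traffic."""
    ctx = ssl.create_default_context()
    ctx.keylog_filename = keylog_path  # needs Python 3.8+ built against OpenSSL 1.1.1+
    return ctx
```

Passing the context to `http.client.HTTPSConnection(host, context=ctx)` while capturing with tcpdump should give you a trace plus the keys needed to decrypt it.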

@steve Andy Baio detailed his use of the Charles proxy, which sounds like it might work, at http://waxy.org/2011/02/how_i_indexed_the_daily/. Another more detailed write-up is at http://www.terryblanchard.com/2010/09/21/debugging-monitoring-iphone-web-requests/

@michael I did wonder about recommending Charles, as it can intercept HTTPS. I guess the issue is getting the SSL keys from the Kindle Fire.

Hi Mike,

Great post, very thought-provoking. As one of the inventors of mod_gzip, I know a little about speeding up the Internet, so I thought I would post an equally thought-provoking comment back.

I think everyone is missing the proverbial performance boat here. How about sending “less data” and making that data “more relevant”? The single biggest problem on the Internet has always been “DEVCAP” (device capability). You never really know what the connecting device is capable of doing, especially in the case of mobile. So why not add more X- header data to the protocol that gives you more context about Who’s at the other end, What their device is capable of, and Where they are? Knowing all that information “ahead of time” means that you can dump a ton of JavaScript and other “stuff” and instead generate a page in real time that exactly conforms to the person’s desires, device capabilities and location.

Just using the above approach could accelerate the response by 75% or more. Now throw in all the SPDY “stuff” and you’ve got yourself something that scales to every device that I connect with.

In essence – the Web that we know becomes the Web that knows us. (And privacy is easily solved – make it opt in with a privacy setting in the browser that allows you to control what is sent).

Bottom line – a vastly accelerated Internet that is personal.

Great writeup, Mike!

VPN tunneling (for those of us dinosaurs still using desktop machines) would be another riff on the Amazon use case you describe, no?

@Peter: I’m not sure how you arrived at the claim that you could reduce page load times by 75% using DEVCAP. I am skeptical. Can you explain more or cite data?

Mike: Just wanted to say thanks. Fantastic post. I’m not exactly your intended audience. I’m a product manager, not an engineer, and admittedly, about 20% of this went over my head. But a huge part of my job is working with engineers and IT to make product decisions. So even though my work doesn’t (generally) include coding, a working knowledge is essential for me to hold down a conversation with my colleagues and make sure the decisions being made are those that best serve the product. I haven’t had a chance to play with Silk but was immediately intrigued by it and how it could speed up response time.

One question, though, that’s concerned me. If SPDY requires going through a proxy such as Amazon, are we opening up additional opportunities for information about our browsing to be acquired and mined?

Mike, great article. I think you missed an important benefit of using SPDY: it enables intelligent prefetching, where the server predicts and pushes the data without the client needing to request it.

It will be interesting to see how SPDY behaves over performance-enhancing proxies (PEPs), which are notorious for changing HTTP/TCP behaviour.

Hi Mike – very interesting.

Seeking two clarifications on your 3rd figure:

1. The bi-directional arrow with “SSL sites are end-to-end encrypted too” – is this actually a two-part connection, with SSL between the client and SPDY gateway, and separate SSL between the SPDY gateway and the Web server? Or is this simply what you’re alluding to in comment 5 about “SSL authentication to your proxy”? If there’s a true “end-to-end” connection, this would preclude caching (which seems like another sizeable advantage), correct?

2. And in the event SSL is true end-to-end, does this inhibit the operation of the SPDY gateway in any other way, or would the gateway simply forward on packets based on IP addresses?

Cheers, Bryce.
