Microsoft published their SPDY proposal today to the IETF. They call it “HTTP + Mobility”. Here are some quick comments on their proposal.

a) It’s SPDY!

The Microsoft proposal is SPDY at its core. They’ve fully retained the major elements of SPDY, including multiplexing, prioritization, and compression, and they’ve even lifted the exact syntax of most of the framing layer – maintaining SYN_STREAM, RST_STREAM, SYN_REPLY, HEADERS, etc.

It’s a huge relief for me to see Microsoft propose SPDY with a few minor tweaks.

b) WebSockets Syntax

When SPDY started a couple of years ago, WebSockets didn’t exist. Microsoft is proposing taking existing SPDY, and changing the syntax to be more like WebSockets. This won’t have any feature impact on the protocol, but does make the protocol overall more like other web technologies.

Personally, I don’t think syntax matters much, and I also see value in symmetry across web protocols. I do think the WebSocket syntax is more complicated than SPDY’s is today, but it’s not that big of a deal. Overall, this part of the Microsoft proposal may make sense. I’m happy that Microsoft has presented it.

c) Removal of Flow Control

The Microsoft proposal is quick to dismiss SPDY’s per-stream flow control as though it is already handled at the TCP layer. However, this is incorrect. TCP handles flow control for the TCP stream. Because SPDY introduces multiple concurrent flows, a new layer of flow control is necessary. Imagine you were sending 10 streams to a server, and one of those streams stalled out (for whatever reason). Without flow control, you either have to terminate all the streams, buffer unbounded amounts of memory, or stall all the streams. None of these are good outcomes, and TCP’s flow control is not the same as SPDY’s flow control.
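To make the stalled-stream scenario concrete, here is a minimal sketch of credit-based, per-stream flow control. It is not SPDY's actual implementation: the `Stream` class, its method names, and the tiny 8-byte windows are all hypothetical and chosen only for illustration. The one thing borrowed from SPDY is the idea that the receiver grants more credit with a WINDOW_UPDATE message.

```python
class Stream:
    """Hypothetical per-stream send window (illustrative, not SPDY's real code)."""

    def __init__(self, stream_id, window=65536):
        self.stream_id = stream_id
        self.window = window   # bytes the receiver has agreed to accept
        self.queued = b""      # data waiting for more credit

    def write(self, data):
        """Queue data and return whatever the current window allows on the wire."""
        self.queued += data
        return self._drain()

    def window_update(self, delta):
        """Receiver freed `delta` bytes of buffer, so more queued data may flow."""
        self.window += delta
        return self._drain()

    def _drain(self):
        n = min(self.window, len(self.queued))
        out, self.queued = self.queued[:n], self.queued[n:]
        self.window -= n
        return out


# Two streams share one TCP connection; the window sizes are made up.
stalled = Stream(1, window=8)
healthy = Stream(3, window=8)

sent_stalled = stalled.write(b"x" * 20)  # only 8 bytes go out, 12 wait
sent_healthy = healthy.write(b"y" * 5)   # not held up by stream 1
resumed = stalled.window_update(4)       # receiver grants 4 more bytes
```

The point of the sketch is that the stalled stream's backlog is bounded by its own window, while the healthy stream keeps moving. TCP alone cannot express that, because it only sees one byte stream.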

This may be an example of where SPDY’s implementation experience trumps any amount of protocol theory. For those who remember, earlier drafts of SPDY didn’t have flow control. We were aware of the problem long ago, but until we fully implemented SPDY, we didn’t know how badly flow control was needed nor how to do it in a performant and simple manner. I can’t emphasize enough with protocols how important it is to actually implement your proposals. If you don’t implement them, you don’t really know whether they work.

d) Optional Compression

HTTP is full of “optional” features. Experience shows that if we make features optional, we lose them altogether due to implementations that don’t implement them, bugs in implementations, and bugs in the design. Examples of optional features in existing HTTP/1.1 include pipelining, chunked uploads, and absolute URIs, and there are many more.

Microsoft did not include any benchmarks for their proposal, so I don’t really know how well it performs. What I do know, however, is that the header compression which Microsoft is advocating be optional was absolutely critical to mobile performance for SPDY. If the Microsoft proposal were truly optimized for mobile, I suspect it would be taking more aggressive steps toward compression rather than pulling it out.

Lastly, I’m puzzled as to why anyone would propose removing the header compression. We could argue about which compression algorithm is best, but it has been pretty non-controversial that we need to start compressing headers with HTTP. (See also: SPDY spec, Mozilla example, UofDelaware research)
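For a rough feel of why header compression pays off, the sketch below runs two nearly identical request header blocks through a single zlib stream, which is the general approach SPDY takes (SPDY also seeds the compressor with a preset dictionary, which is omitted here). The header names and values, and the `header_block` helper, are made up for illustration.

```python
import zlib


def header_block(path):
    """Build a hypothetical request header block; the values are illustrative."""
    headers = {
        "method": "GET",
        "url": path,
        "version": "HTTP/1.1",
        "host": "www.example.com",
        "user-agent": "Mozilla/5.0 (Linux; Android) AppleWebKit/535 Mobile Safari/535",
        "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        "accept-encoding": "gzip,deflate",
        "accept-language": "en-US,en;q=0.8",
        "cookie": "session=0123456789abcdef0123456789abcdef; prefs=compact",
    }
    return "".join(f"{k}: {v}\r\n" for k, v in headers.items()).encode()


# One compressor for the whole connection, so later requests can
# back-reference headers that were already sent.
compressor = zlib.compressobj()

sizes = []
for path in ["/index.html", "/style.css"]:
    raw = header_block(path)
    wire = compressor.compress(raw) + compressor.flush(zlib.Z_SYNC_FLUSH)
    sizes.append((len(raw), len(wire)))

for raw_len, wire_len in sizes:
    print(f"raw={raw_len} bytes, compressed={wire_len} bytes")
```

The second request differs from the first only in its URL, so almost all of it is encoded as a reference to bytes the peer has already seen. On a slow mobile uplink, that difference is sent on every single request.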

e) Removal of SETTINGS frames

SPDY has the promise of “infinite flows” – that a client can make as many requests as it wants. But this is a jedi mind trick. Servers, for a variety of reasons, still want to limit a client to a reasonable number of flows. And different servers have very different ideas about what “reasonable” is. The SETTINGS frame is how servers communicate to the client that they want to do this.
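As a sketch of how a client might honor such a limit, assume the server's SETTINGS frame carries a maximum-concurrent-streams value (SPDY calls this SETTINGS_MAX_CONCURRENT_STREAMS). The `ClientSession` class and its methods below are hypothetical, not taken from any real SPDY client.

```python
from collections import deque


class ClientSession:
    """Hypothetical client that queues requests beyond the server's stream limit."""

    def __init__(self):
        self.max_concurrent_streams = None  # None until the server advertises a limit
        self.active = set()                 # stream IDs currently open
        self.waiting = deque()              # requests not yet sent
        self.next_id = 1                    # client-initiated stream IDs are odd in SPDY

    def on_settings(self, max_concurrent_streams):
        """Record the limit the server sent in its SETTINGS frame."""
        self.max_concurrent_streams = max_concurrent_streams
        self._pump()

    def request(self, url):
        self.waiting.append(url)
        self._pump()

    def on_stream_closed(self, stream_id):
        self.active.discard(stream_id)
        self._pump()

    def _has_room(self):
        limit = self.max_concurrent_streams
        return limit is None or len(self.active) < limit

    def _pump(self):
        # Open new streams only while we are under the advertised limit.
        while self.waiting and self._has_room():
            self.waiting.popleft()
            self.active.add(self.next_id)
            self.next_id += 2


session = ClientSession()
session.on_settings(max_concurrent_streams=2)
for i in range(5):
    session.request(f"/resource/{i}")
```

With a limit of 2, only two of the five requests open streams right away. The rest wait until `on_stream_closed` frees a slot. Without a SETTINGS frame, the client has no way to learn what the server considers reasonable.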

I’m guessing this is an oversight in the Microsoft proposal.

f) Making Server Push Optional

Microsoft proposes to make server push optional. There is a fair discussion to be had about removing Server Push for a number of reasons, but to make it optional seems like the worst of all worlds. Server Push is not trivial, and is definitely one of the most radical portions of the protocol. To make it optional without removing it leaves implementors with the burden of all the complexity with potentially none of the benefits.

The authors offer opinions as to the merits of Server Push, but offer no evidence or data to back up those claims.

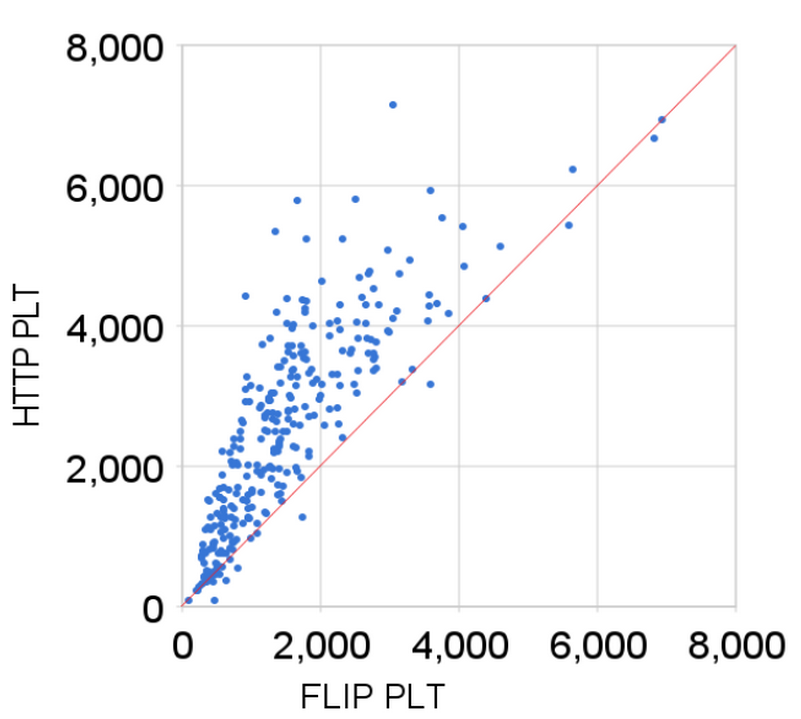

g) Removal of IP Pooling

The Microsoft writeup eliminates connection pooling, but it is unclear why. Connection pooling is an important element of SPDY both for performance and for efficiency on the network. I’m not sure why Microsoft would recommend removing this, especially without benchmarks, data, or implementation details. The SPDY benchmarks clearly show it has a measurable benefit, and without this feature, mobile performance for the Microsoft proposal will surely be slower than for SPDY proper.

Conclusion

I’m happy with the writeup from Microsoft. I view their proposal as agreement that the core of SPDY is acceptable for HTTP/2.0, which should help move the standardization effort along more quickly. They’ve also raised a couple of very reasonable questions. It’s clear that Microsoft hasn’t done much testing or experimentation with their proposal yet. I’m certain that with data, we’ll come to resolution on all fronts quite quickly.